The making of

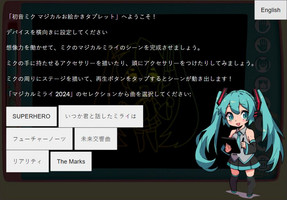

“Hatsune Miku’s Magical Drawing Tablet” is an interactive toy where you draw accessories and the stage for Hatsune Miku, who dances to the music while she sings and the lyrics are displayed on screen. All 6 songs from the Magical Mirai 2024 selection are available. You can play online here.

Following is the journey of its creation.

May 16

While looking for info about the Magical Mirai concerts, and thinking I’ll likely never visit, I found out about the Programming Contest. Maybe this is the way I’ll be able to “be there” somehow?

I tried to figure out details, and had some initial ideas like Miku looking at the clouds and the lyrics appearing in the clouds.

It’s a webapp, with JavaScript, so it should be something perfect for me.

May 17

I saw my daughters’ drawing tablet in the morning (the kind of “cheap” tablets they give as a gift at kids’ parties) and I knew I had a solid idea: to simulate the lyrics being drawn on it, and also have some “monochrome” low-frame-rate animations of Miku dancing and maybe some environment around.

Looking around for stuff, finally managed to get things working using the sample app.

Had two goals for today: connect to the API and get characters on screen in sync with the music, and maybe have a proof of concept of the visuals for the magic tablet simulation.

The idea is to have a rainbow gradient in the background and a black texture on top, and then render the lyrics and the animation in a mask applied to the black texture, so that we can see through to the rainbow background. Ideally it wouldn’t be a sharp mask but would have smooth borders instead.
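A minimal sketch of that masking idea, not the project's actual markup: a rainbow gradient at the bottom, a black cover on top, and a mask on the cover whose black (blurred) strokes punch soft-edged holes through it. The function name `buildTabletSvg`, the element ids and the colors are all assumptions made up for illustration.

```typescript
// Hypothetical helper: returns SVG markup for a "magic tablet" surface.
// Mask semantics: white areas keep the black cover, black areas hide it,
// so anything drawn in black inside #strokes reveals the rainbow below.
function buildTabletSvg(width: number, height: number, blur: number): string {
  return [
    `<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 ${width} ${height}">`,
    `  <defs>`,
    `    <linearGradient id="rainbow" x1="0" y1="0" x2="1" y2="1">`,
    `      <stop offset="0" stop-color="#ff5f6d"/>`,
    `      <stop offset="0.5" stop-color="#ffe66d"/>`,
    `      <stop offset="1" stop-color="#39c5bb"/>`,
    `    </linearGradient>`,
    // The blur is what turns a sharp mask into one with smooth borders.
    `    <filter id="soften"><feGaussianBlur stdDeviation="${blur}"/></filter>`,
    `    <mask id="ink">`,
    `      <rect width="100%" height="100%" fill="white"/>`,
    `      <g id="strokes" fill="black" stroke="black" filter="url(#soften)"></g>`,
    `    </mask>`,
    `  </defs>`,
    `  <rect width="100%" height="100%" fill="url(#rainbow)"/>`,
    `  <rect width="100%" height="100%" fill="black" mask="url(#ink)"/>`,
    `</svg>`,
  ].join("\n");
}
```

Lyrics and doodles would then be appended as children of the `#strokes` group; everything else stays static.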

Discussed the idea with several friends. Armyboy is interested, and his contributions will probably be super important to accompany the lyrics with some cute low-frame-rate chibi dancing Miku doodles.

Initially I was thinking of using PIXI with some WebGL shaders, but decided, at least for now, to go a simpler route with SVG and CSS (!). It actually works pretty well; not sure if it will stay like this or is just for prototyping.

There’s still a lot of work to do to organize all the DOM elements so that there can be a single container that can be freely moved around and scaled and everything stays in place. I’ll also have to change the entire lyrics processing to do it character by character instead of word by word, and find a workaround for the lack of text wrapping from native SVG 1.0.
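One possible workaround for the missing text wrapping, sketched under the assumption that each character becomes its own positioned element: compute a line and column per character and start a new line after a fixed count. `layoutChars` and `PlacedChar` are hypothetical names, and a real layout would probably also want to avoid breaking English words in half.

```typescript
interface PlacedChar {
  char: string;
  line: number;
  column: number;
}

// Assigns a (line, column) slot to every character, wrapping after
// maxPerLine characters. Spaces that would open a new line are dropped.
function layoutChars(text: string, maxPerLine: number): PlacedChar[] {
  const placed: PlacedChar[] = [];
  let line = 0;
  let column = 0;
  // Array.from splits by code point, so surrogate pairs stay whole.
  for (const char of Array.from(text)) {
    if (column >= maxPerLine) {
      line++;
      column = 0;
    }
    if (char === " " && column === 0) continue;
    placed.push({ char, line, column });
    column++;
  }
  return placed;
}
```

Each slot can then be turned into `x`/`y` attributes by multiplying the column and line by a cell size.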

May 20

Played around a lot with the DOM to have everything under a component that can be scaled and transformed as needed.

Still need to work a little bit on the positioning, which is a bit tricky when combining flex and scaling transforms.

Also invested a little bit in the infrastructure: set up a dev server and improved the webpack setup.

Next up will be improving the word processing so that instead of having an animate function for all the words, we check for new words on every frame and position them individually (the idea is to place them a bit irregularly in terms of line position, rotation and scaling, so they look a bit more organic).
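A rough sketch of that per-frame check, assuming the words arrive as a list sorted by start time (the `TimedWord` shape, the `WordScheduler` class and the jitter ranges are made up for illustration, not taken from the lyrics API). Each word is emitted once, with a small random offset, rotation and scale.

```typescript
interface TimedWord {
  text: string;
  startMs: number;
}

interface Placement {
  text: string;
  dy: number;          // vertical nudge off the baseline, in pixels
  rotationDeg: number; // slight tilt
  scale: number;       // slight size variation
}

class WordScheduler {
  private words: TimedWord[];
  private random: () => number;
  private nextIndex = 0;

  // `random` is injectable so the jitter can be made deterministic in tests.
  constructor(words: TimedWord[], random: () => number = Math.random) {
    this.words = words;
    this.random = random;
  }

  // Call once per frame with the playback position; returns only the
  // words that started since the previous call.
  update(positionMs: number): Placement[] {
    const fresh: Placement[] = [];
    while (
      this.nextIndex < this.words.length &&
      this.words[this.nextIndex].startMs <= positionMs
    ) {
      fresh.push(this.place(this.words[this.nextIndex]));
      this.nextIndex++;
    }
    return fresh;
  }

  private jitter(range: number): number {
    return (this.random() * 2 - 1) * range;
  }

  private place(word: TimedWord): Placement {
    return {
      text: word.text,
      dy: this.jitter(6),
      rotationDeg: this.jitter(5),
      scale: 1 + this.jitter(0.1),
    };
  }
}
```

This version only moves forward; seeking backwards in the song would need the index to be reset.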

Also thinking that maybe the default should be landscape instead of portrait, but not sure about it. Might want to have portrait only, and some cool background.

Have also been listening to the tracks, and checking out the lyrics, especially Future Notes, to get some inspiration for the animated Miku doodles.

Mirai Mirai Mirai

May 21

Worked on text rendering character by character, and also manually handling spaces between words for both Japanese (using moments of time when there are no words) and English (detecting words properly).
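The two spacing rules could look roughly like this. It is only a sketch: the `TimedChar` shape, the `wordIndex` field and the 400 ms silence threshold are assumptions chosen for the example.

```typescript
interface TimedChar {
  char: string;
  startMs: number;
  endMs: number;
  wordIndex: number; // index of the word this character belongs to
}

// Japanese: insert a space when there is a long enough silence between
// two characters. English: insert a space at every word boundary.
function withSpaces(
  chars: TimedChar[],
  language: "ja" | "en",
  gapMs: number = 400
): string {
  let out = "";
  chars.forEach((current, i) => {
    const previous = i > 0 ? chars[i - 1] : undefined;
    if (previous) {
      const brokeByTime =
        language === "ja" && current.startMs - previous.endMs >= gapMs;
      const brokeByWord =
        language === "en" && current.wordIndex !== previous.wordIndex;
      if (brokeByTime || brokeByWord) out += " ";
    }
    out += current.char;
  });
  return out;
}
```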

Peeked a bit into “beat” detection, and used it to flip the test doodle so she dances to the music.

Also worked a bit on the visuals: took advantage of the work done on having the entire drawing tablet as a DOM element that can be transformed, so it’s a bit rotated now (theoretically we could do much more with it), and edited the drawing tablet a little bit so it’s now branded as a Hatsune Miku thing (using art from the Piapro characters page, which I understand is allowed). I also added some anime clouds in the background, which I’m not sure will make it to the end, but I want to get a feel for it.

Talked with Armyboy today; he’s going to start working on some “poses” for the characters. Since there is no official choreography video for it, we’ll just have to use our imagination as well as references from past Magical Mirai performances.

May 31

Got some ideas from Armyboy yesterday. I think it’s still not his own work, but a reference of a half-chibi Miku style we could follow.

Needed to test how it would look, especially regarding line width, so I put it into the program. Then I figured I could work a little bit more on something that I’d been plotting for a while now: adding a bit of simulated personality to the character.

So, I evolved the simple “flip to the beat” animation I had set up. The character now has three layers (body, eyes and mouth), and they are updated independently, with the body cycling through a 3-frame yoyo animation to the beat, the mouth toggling between a closed and two open positions based on the words spoken, and the eyes changing between phrases.
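To illustrate how the three layers can be driven independently, here is a small sketch. The `SongState` fields, the way the mouth alternates by character index and the number of eye variants are assumptions; only the overall scheme (yoyo body, closed or open mouth, eyes per phrase) comes from the description above.

```typescript
// Ping-pong ("yoyo") frame index: with 3 frames, successive beats give
// 0, 1, 2, 1, 0, 1, 2, 1, ...
function yoyoFrame(beatIndex: number, frameCount: number): number {
  if (frameCount <= 1) return 0;
  const period = 2 * (frameCount - 1);
  const step = ((beatIndex % period) + period) % period;
  return step < frameCount ? step : period - step;
}

interface SongState {
  beatIndex: number;        // how many beats have passed
  charIndex: number | null; // character being sung, or null during silence
  phraseIndex: number;      // current phrase of the lyrics
}

interface Pose {
  body: number;  // frame of the body layer
  mouth: number; // 0 = closed, 1 and 2 = the two open shapes
  eyes: number;  // which eye drawing to show
}

function poseFor(state: SongState, eyeVariants: number = 3): Pose {
  return {
    body: yoyoFrame(state.beatIndex, 3),
    // Closed when nothing is sung, otherwise alternate the open shapes.
    mouth: state.charIndex === null ? 0 : 1 + (state.charIndex % 2),
    // Eyes only change when a new phrase starts.
    eyes: state.phraseIndex % eyeVariants,
  };
}
```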

I also updated the background so it’s now a desk. Still trying some ideas.

June 14

Got an original animation from Armyboy! It needs some tweaks on the line width and the positioning of eyes and mouth, but it’s looking good! Had to change the animation logic to have multiple frames per beat.
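One way to get several frames out of a single beat is to map the progress inside the beat to a frame index. This is a sketch with hypothetical parameter names; it assumes the start time and duration of the current beat are known.

```typescript
// Maps the playback position inside the current beat to one of
// framesPerBeat frames (0 .. framesPerBeat - 1).
function frameWithinBeat(
  positionMs: number,
  beatStartMs: number,
  beatDurationMs: number,
  framesPerBeat: number
): number {
  if (beatDurationMs <= 0 || framesPerBeat <= 0) return 0;
  const progress = (positionMs - beatStartMs) / beatDurationMs;
  const clamped = Math.min(Math.max(progress, 0), 1);
  // The outer min keeps the index in range when progress is exactly 1.
  return Math.min(Math.floor(clamped * framesPerBeat), framesPerBeat - 1);
}
```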

June 15

Migrated to TypeScript so that the project is better structured, and refactored the animations logic into an Animation class. Also included facial tracking support so that the eyes and mouth can be placed correctly as the head moves around.
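As an illustration only (the real class is not shown here), an animation class with per-frame head tracking might store an anchor point for the head in every frame and shift the face layers by the difference from the first frame. The names `DoodleAnimation`, `DoodleFrame` and `headAnchor` are hypothetical.

```typescript
interface AnchorPoint {
  x: number;
  y: number;
}

interface DoodleFrame {
  image: string;           // path or id of the body drawing for this frame
  headAnchor: AnchorPoint; // where the head sits in this frame
}

class DoodleAnimation {
  private frames: DoodleFrame[];

  constructor(frames: DoodleFrame[]) {
    if (frames.length === 0) {
      throw new Error("An animation needs at least one frame");
    }
    this.frames = frames;
  }

  // Wraps around, so any integer index maps to a valid frame.
  frameAt(index: number): DoodleFrame {
    const count = this.frames.length;
    return this.frames[((index % count) + count) % count];
  }

  // Translation to apply to the eyes and mouth layers so they follow
  // the head, relative to where it was in the first frame.
  faceOffset(index: number): AnchorPoint {
    const reference = this.frames[0].headAnchor;
    const current = this.frameAt(index).headAnchor;
    return { x: current.x - reference.x, y: current.y - reference.y };
  }
}
```

A single anchor like this only handles translation; it cannot follow a head that also rotates or changes shape between frames.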

July 3

All these days wondering about the interactive part of the thing. Implemented some stupid reaction/rhythm thing where you have to touch a leek that pops out randomly to the beat.

Went to bed late, thinking about what kind of interactivity I could introduce. In the end the answer was obvious: the players should be able to draw on the tablet, there’s no escape from it.

July 4

Discussed a bit with friends about how to implement drawing. My initial idea was to use mouse/touch drag events to draw circles or lines over a canvas, then, every frame, transform the raster image data of the canvas to base64 and load it into an image in the mask.

July 5

However, in the end, I decided to try something else for the drawing implementation. Instead of meddling with raster data, I created a “Pencil” object which creates SVG path elements in response to mousemove events. It works!

Talked with Army. What else do we need? Maybe more animations, or a better background?

Family stuff happened during the afternoon, but I kept thinking about this and I think I have a finalized idea of the interactive aspects: the player will be able to draw accessories for Miku, and they will move along as she dances.
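The core of such a pencil can be sketched without touching the DOM: collect pointer positions and turn them into the `d` attribute of an SVG path. The class name `PencilStroke` and the minimum-distance filter are assumptions added for the example.

```typescript
interface StrokePoint {
  x: number;
  y: number;
}

class PencilStroke {
  private points: StrokePoint[] = [];
  private minDistance: number;

  // minDistance drops points that barely moved, keeping paths short.
  constructor(minDistance: number = 2) {
    this.minDistance = minDistance;
  }

  // Call from a mousemove / touchmove handler while the button is down.
  addPoint(x: number, y: number): void {
    const last = this.points[this.points.length - 1];
    if (last && Math.hypot(x - last.x, y - last.y) < this.minDistance) return;
    this.points.push({ x, y });
  }

  // "M" moves to the first point, "L" draws a line to each later one.
  toPathData(): string {
    return this.points
      .map((p, i) => `${i === 0 ? "M" : "L"}${p.x} ${p.y}`)
      .join(" ");
  }
}
```

In the browser, the result would go into a path created with `document.createElementNS("http://www.w3.org/2000/svg", "path")` via `setAttribute("d", stroke.toPathData())`, updated on every move event.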

July 7

We are running out of time. I implemented a lot of boring code to support mobile events, and changed it to be landscape instead to make better use of the screen space.

It’s a last-minute thing but… I decided to get back in touch with Alzarac and ask him if he could jump into the project to do something that would be super important: redesign the “physical” part of the tablet to make it our own.

End of day and after a lot of iteration, we had this:

July 8, Final Day for the submission

Of course there was a big rush to the goal line. While I continued iterating with Alcaraz on the tablet image, I implemented Japanese localization, song selection with a tutorial screen, and base64 image preloading so that it played well over the Internet on mobile devices. I probably did a lot of other stuff that I didn’t manage to track, because it was crazy 😀

August 7

Unfortunately, our entry was not selected among the 10 finalists. I still packaged it nicely and put it up on itch.io for people to enjoy. At least my daughter and her schoolmates seem to really enjoy it so I’ll take that as a grand prize 🙂

I did some final tweaks now that we are outside of the contest; used the “bolder” Miku animation, increased the character limit to 25 and reduced the strength of the blur effect. As much as I tried to “fix” the head tracking, it doesn’t seem to be possible as the head seems to rotate and warp, so each element (eyes and mouth) would have to be tracked separately.
